(or How I implemented an unanswered question tracker and began to grasp the size of the site.)

I'm not sure when it happened, but Math.StackExchange is huge. I remember a distant time when you could, if you really wanted to, read all the traffic on the site. I wouldn't recommend trying that anymore.

I didn't realize just how vast MSE had become until I revisited a chatroom dedicated to answering old, unanswered questions, The Crusade of Answers. The users there especially like finding old, secretly answered questions that are still on the unanswered queue – either because the answers were never upvoted, or because the answer occurred in the comments. Why do they do this? You'd have to ask them.

But I think they might do it to reduce clutter.

Sometimes, I want to write a good answer to a good question (usually as a thesis-writing deterrence strategy).

And when I want to write a good answer to a good question, I often turn to the unanswered queue. Writing good answers to already-well-answered questions is, well, duplicated effort. [Worse is writing a good answer to a duplicate question, which is really duplicated effort. The worst is when the older version has the same, maybe an even better answer]. The front page passes by so quickly and so much is answered by really fast gunslingers. But the unanswered queue doesn't have that problem, and might even lead to me learning something along the way.

In this way, reducing clutter might help Optimize for Pearls, not Sand. [As an aside, having a reasonable, hackable math search engine would also help. It would be downright amazing]

And so I found myself back in The Crusade of Answers chat, reading others' progress on answering and eliminating the unanswered queue. I thought to myself: how many unanswered questions are asked each day? So I wrote a script that runs at around 6pm Eastern US time each day and feeds The Crusade of Answers with the current number of unanswered questions and the change from the previous day.
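The core of such a tracker is just a subtraction between two daily snapshots. Here is a minimal sketch in Python of how the daily message might be put together. This is not the actual script; the function name, the message wording, and the snapshot counts are all made up for illustration, and fetching the counts and posting to chat are left out entirely.

```python
def daily_report(previous_count, current_count):
    """Format a one-line update from two daily snapshots of the unanswered count.

    Both counts are assumed to have been fetched elsewhere (hypothetical inputs).
    """
    change = current_count - previous_count
    # The ':+d' format always shows the sign, so growth and shrinkage read clearly.
    return f"Unanswered questions: {current_count} ({change:+d} since yesterday)"


# Placeholder snapshot values, chosen only so that the difference is 166.
print(daily_report(60000, 60166))
```

A positive change means more questions joined the unanswered queue than left it that day.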

I had no idea that 166 more questions were asked than answered on a given day. There are only four sites on the StackExchange network that get 166 questions per day (SO, MathSE, AskUbuntu, and SU, in order from big to small). Just how big are we getting? The rest of this post is all about trying to understand some of our growth through statistics and pretty pictures.

I figure if you've made it this far, you're not afraid of numbers. But before we dive in, I should mention that everything here is taken from the data explorer and the SE Data Dumps. The plots are generated in plot.ly and ported here. The colorscheme is roughly based on the colorscheme of MathSE, with whites, reds, and blues.

Let's start with the most fundamental aspect: the questions asked.

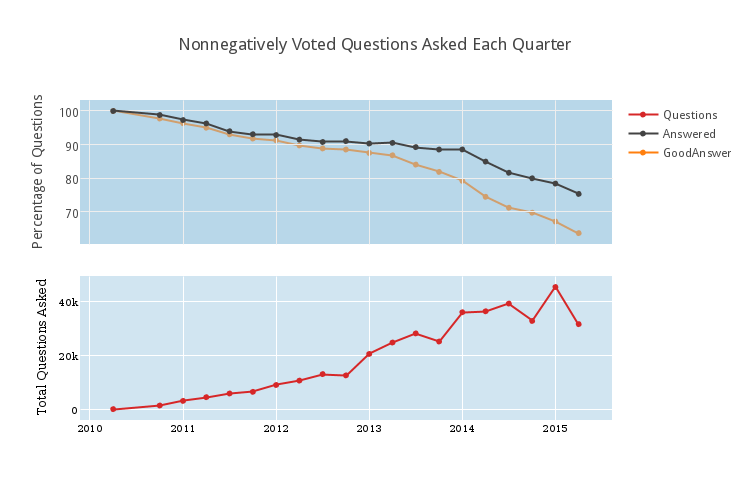

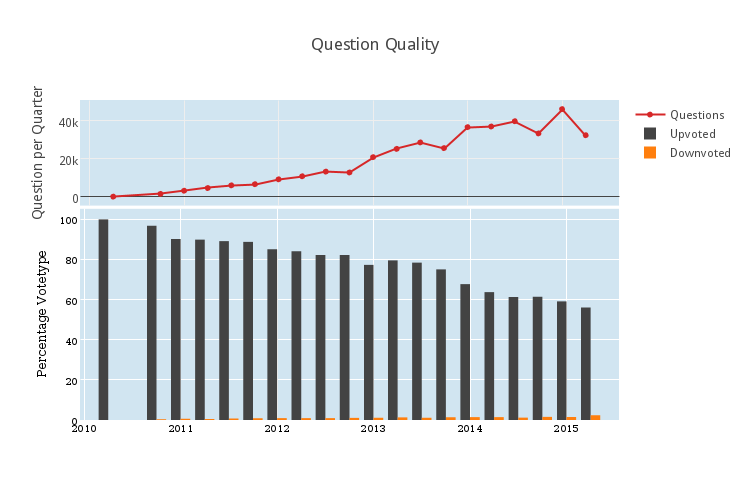

At the bottom is the total number of questions asked each quarter that now have a score of at least 0. Community-Wiki questions are not included. In the first quarter, exactly 1 question was asked (I'll assume it was a very important question). Last quarter, about 45000 questions were asked. The dip at the end is because this quarter is still in progress (this will be common). At the top is a related graph, indicating the percentage of these questions that are answered (in black) or have answers with score at least 1 (in orange, and mysteriously behind the axis lines). It is extremely important to realize that the vertical axis of the top graph is truncated, so that the bottom of the axis is at about 65%.

It's also important to realize that sometimes it takes months for a question to be answered. The questions from 2014 and before have had this time, but the more recent questions haven't. So the precipitous-looking dip in the percentage of questions with a good answer is a bit "artificially lower" at the end than it should be. It might be reasonable to assume that approximately 75-80 percent of questions with $\geq 0$ votes stand to get upvoted answers. It also seems likely (to me) that a large number of answered-but-not-positively-answered questions actually have good answers, but they just weren't upvoted. This is more difficult to classify now. [But not impossible. The data includes the dates of votes, so we could see how long it takes for posts to get upvoted in general].
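For anyone who wants to reproduce this kind of tally, here is a rough sketch in Python of the per-quarter bookkeeping described above. It is not the query behind the plots. The tuple layout `(asked_on, question_score, is_community_wiki, answer_scores)` and the function names are assumptions made for illustration, and the sample rows are invented.

```python
from collections import defaultdict
from datetime import date


def quarter_label(day):
    """Map a date to a label such as '2014Q1'."""
    return f"{day.year}Q{(day.month - 1) // 3 + 1}"


def answer_rates_by_quarter(questions):
    """Return {quarter: (n_questions, pct_answered, pct_with_upvoted_answer)}.

    Each question is a hypothetical tuple:
    (asked_on, question_score, is_community_wiki, answer_scores).
    """
    totals = defaultdict(int)
    answered = defaultdict(int)
    upvoted = defaultdict(int)
    for asked_on, score, is_cw, answer_scores in questions:
        if score < 0 or is_cw:
            continue  # keep only non-wiki questions with score at least 0
        quarter = quarter_label(asked_on)
        totals[quarter] += 1
        if answer_scores:
            answered[quarter] += 1
        if any(s >= 1 for s in answer_scores):
            upvoted[quarter] += 1
    return {
        q: (totals[q], 100.0 * answered[q] / totals[q], 100.0 * upvoted[q] / totals[q])
        for q in sorted(totals)
    }


# Invented sample rows, only to show the shape of the input.
sample = [
    (date(2014, 1, 15), 2, False, [3, 0]),
    (date(2014, 2, 1), 0, False, [0]),
    (date(2014, 3, 9), 1, False, []),
    (date(2014, 3, 20), -1, False, [1]),  # excluded: negative score
    (date(2014, 5, 2), 4, True, [2]),     # excluded: Community Wiki
    (date(2014, 6, 30), 0, False, [1]),
]
print(answer_rates_by_quarter(sample))
```

Because recent quarters haven't had time to collect answers and votes, the last few entries of such a tally will always look worse than they eventually turn out.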

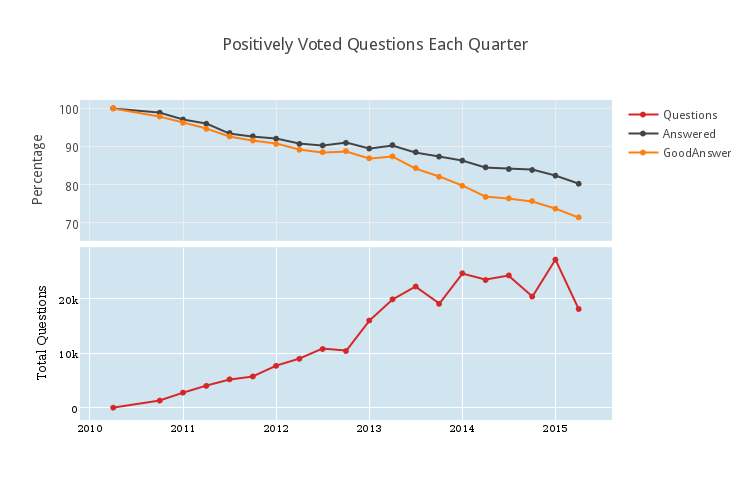

If we only look at positively voted questions (Optimize for Pearls, right?), we get the following.

It looks like having a positive score corresponds with a roughly 10 percent increase in the probability of receiving a "good answer" in the short term, but for questions more than a year old, this pattern doesn't hold. Perhaps it's good that so many answerers are willing to help people independently of the score on the question. This is a bit foreign to me, since if I answer a question, I almost always upvote it; my threshold for taking the time to answer someone else's question is much higher than my threshold to upvote the question. Remember to Vote Early, and Vote Often!

It's also remarkable that there are so many zero-score questions. There were almost 20000 zero-score questions asked in the first quarter of 2014. This prompts the following question: how has question quality changed over time?

Let's suppose that a question is "good" if it is upvoted, and a question is "bad" if it is downvoted. Then in the bar graph above, we see how many good (in black) and how many bad (in orange) questions were asked as a percentage of the total number of questions (repeated in the subplot above). Two major trends are apparent from this plot. Firstly, it's important to notice what's not there, which is very many downvoted questions. Is that because no one asks downvoted questions? No. It's because downvoted questions get closed, and then deleted, and my analysis does not include deleted questions. So if a question is bad enough to get downvoted, it seems it's usually bad enough to get deleted.

Secondly, more and more questions are receiving fewer and fewer votes. We're nearing the point where only 50% of the questions being asked receive a positive vote total. And that's scary. Is question quality going down? Are fewer users voting?
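The good/bad labelling above is simple enough to state in a few lines of Python. This is only a sketch of the classification, with hypothetical function names, and it assumes you already have a list of current question scores for one quarter (deleted questions excluded, as in the plots).

```python
from collections import Counter


def classify(score):
    """Label a question by its current score, following the convention above."""
    if score > 0:
        return "good"
    if score < 0:
        return "bad"
    return "zero"


def quality_shares(scores):
    """Return the percentage of good, bad, and zero-score questions."""
    counts = Counter(classify(s) for s in scores)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no questions to classify")
    return {label: 100.0 * counts[label] / total for label in ("good", "bad", "zero")}


# Invented scores for a single quarter.
print(quality_shares([3, 1, 0, 0, -1, 2, 0, 5]))
# prints {'good': 50.0, 'bad': 12.5, 'zero': 37.5}
```

Note that a score of zero hides two different cases: a question nobody voted on, and a question whose upvotes and downvotes cancel. Separating them would need the individual votes rather than the net score.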

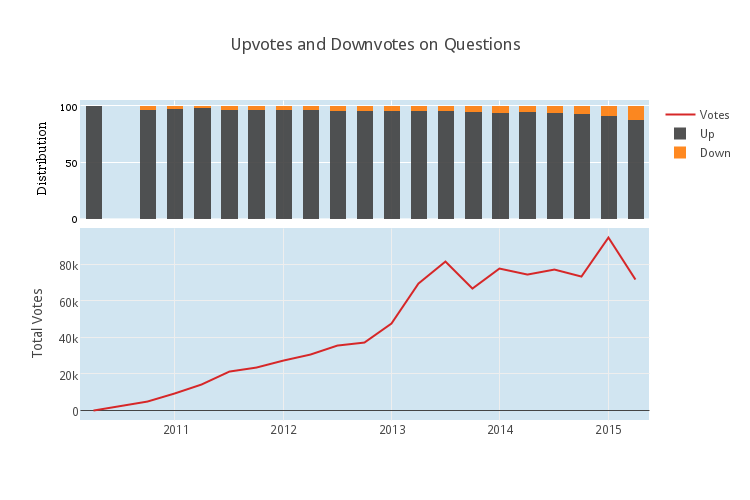

Let's look to see if more and more votes are being spent on downvotes. The following plot, closely related to the plot above, breaks question votes down by type, comparing the total number of upvotes to the total number of downvotes.

On the bottom, we have the total number of question votes per quarter. Notice, it looks very similar to the number of questions per quarter. This isn't a surprise. It also looks like the voting distribution is changing only slightly. (Recall that the most recent downvoted posts haven't yet had the full time to be seen by many people and ultimately get deleted, so there is a slight bias in recent quarters towards downvotes. It might be nice to come back in a year and check again to see how this heuristic stands).

On the other hand, it might be a surprise that there are so few votes per question on average.

In fact, I'd go so far as to make the evaluative statement that there are too few votes per question on average, and I would prefer if users both upvoted good posts more and downvoted bad posts more. This would have several positive impacts. The number of answered-but-not-upvoted questions would fall, it would be easier to find good interesting-but-unanswered questions, and fewer bad questions would stand idly.

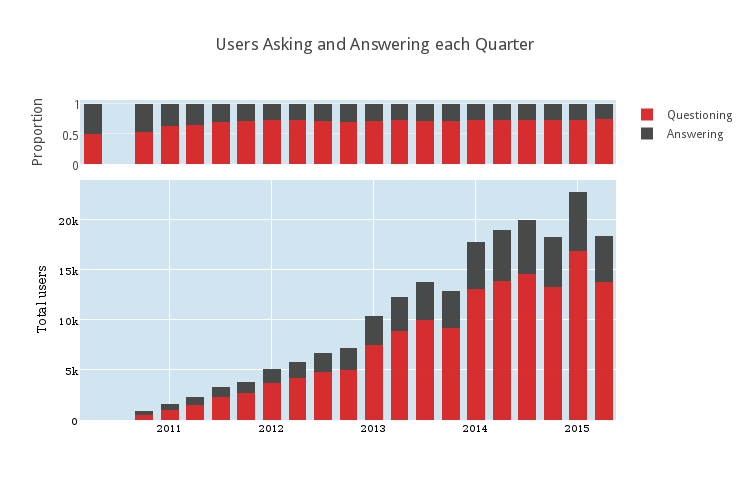

How many people ask questions compared to how many people answer questions?

This shows the number of users who answer at least one question in each quarter compared to the number of users who asked at least one question. There were some choices to be made here in presentation. I chose to stack the charts. This makes clear the fact that many, many more people ask questions than answer questions. It's been about 3 to 1 for the last... well, almost always. The top subplot shows the ratio of the number of question-askers to the number of answer-givers. It's small because it's remarkably stationary. Although the numbers are changing, the ratio of 3 question-askers for each answer-giver has been pretty steady for the last 3 years.
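The asker-to-answerer ratio counts distinct users, not posts, so someone who asks ten questions in a quarter still counts once. A small Python sketch of that counting follows. The `(quarter, user_id, kind)` layout and the function name are assumptions for illustration, not the query used for the chart.

```python
from collections import defaultdict


def asker_answerer_ratio(posts):
    """Return {quarter: distinct askers / distinct answerers}.

    Each post is a hypothetical tuple (quarter, user_id, kind),
    where kind is either "question" or "answer".
    """
    askers = defaultdict(set)
    answerers = defaultdict(set)
    for quarter, user_id, kind in posts:
        if kind == "question":
            askers[quarter].add(user_id)
        elif kind == "answer":
            answerers[quarter].add(user_id)
    # Skip quarters with no answerers to avoid dividing by zero.
    return {
        q: len(askers[q]) / len(answerers[q])
        for q in sorted(askers)
        if answerers.get(q)
    }


# Invented posts: three distinct askers (user 1 asks twice), one answerer.
posts = [
    ("2014Q1", 1, "question"),
    ("2014Q1", 1, "question"),
    ("2014Q1", 2, "question"),
    ("2014Q1", 3, "question"),
    ("2014Q1", 9, "answer"),
    ("2014Q1", 9, "answer"),
]
print(asker_answerer_ratio(posts))
# prints {'2014Q1': 3.0}
```

Using sets here is what makes repeat askers and repeat answerers count only once per quarter.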

Places for more investigation

It would be interesting to follow this up with statistics on how many people ask at least 2 questions in a quarter compared to those that answer at least 2 questions, and so on. It would also be interesting to see if higher and higher voted questions correlate with higher and higher good-answer percentages. There are many avenues for more statistical exploration, but I leave those behind.

As it happens, I'm currently investigating other statistical phenomena through Math.StackExchange. The most interesting project, I think, is something I'm working on with a computational linguistics grad student. It's about designing machine learning processes to automatically understand certain parts of questions and answers. For instance, can we reliably and automatically understand what references are being suggested? A natural follow-up is, can we determine whether different questions are related based on having similar reference-requests? Another similar project is about automatically detecting duplicates in reference-request questions. [We're looking at reference requests because they should provide an easy starting point. Answers frequently link to the reference, for instance. So if this is very hard, it's an indicator that we won't be using machine learning for much more. If it's totally fine, then we will]. But that's just in our free time.

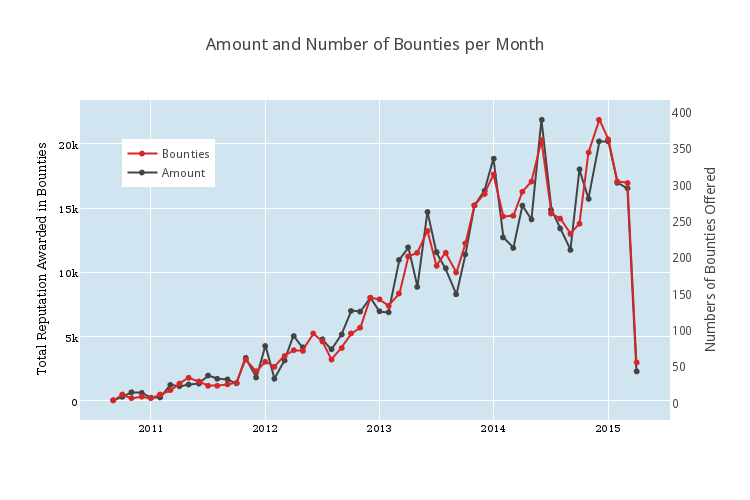

If you've made it this far, have a bonus graph. Here are the number (in black) and amount of reputation (in red) of bounties offered each month.

This appears both on the MathStackexchange Community Blog and on the author's personal website.
